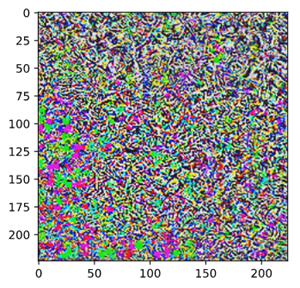

What is an Adversarial Attack?

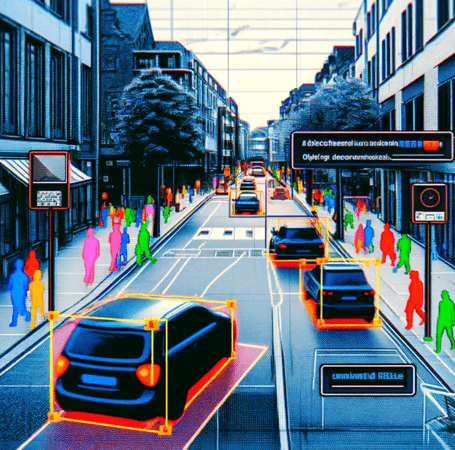

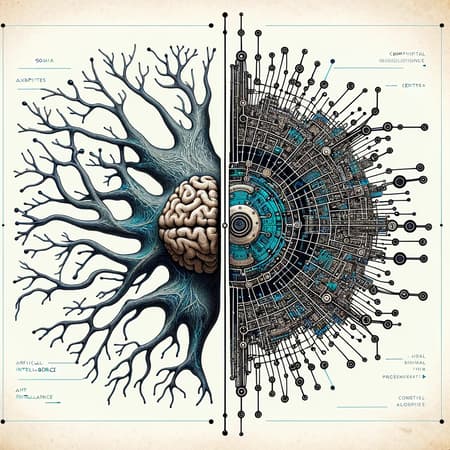

Adversarial attacks in AI involve intentionally creating inputs that cause AI models to make mistakes. These attacks are not random; they are carefully crafted to exploit specific vulnerabilities in an AI system. Adversarial inputs in Computer Vision can often go undetected because they are normally not visible to the human eye; in language, adversarial input looks like gibberish. By understanding and simulating these attacks, we can gain invaluable insights into how AI models might fail in the real world, particularly under malicious conditions.