This case study gives an overview of an approach taken for a client in the security industry, who must remain confidential. We have therefore taken care to ensure nothing sensitive is revealed. This page can be used to understand the general approach Advai takes to assure computer vision systems.

15 Mar 2023

Assuring Computer Vision in the Security Industry

Words by

Alex Carruthers

- Advai's Challenge:

- Assess the performance, security, and robustness of an AI model.

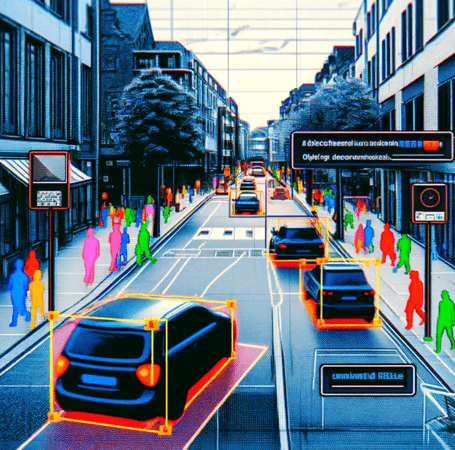

- The AI model is used for a computer-vision object-detection system.

- The system needs to be robust enough to: a) Detect a variety of objects in a busy, low-quality visual environment. b) Withstand adversarial manipulation.

- Keywords:

- Computer vision, recognition, classification, images, data selection, attack, ROI, synthetic, security, object detection, out of sample, edge cases, accuracy, bias.

- Assessment Categories:

- Data, Models, and Packaging were identified as potential weaknesses.

- Actions Taken:

- Data Screening: Analysis of the labelled training data to detect any imbalances.

- Out-of-sample analysis: Tests of how much disturbances to the input reduce the model's ability to recognise objects.

- Adversarial Attack (AA) Analysis: Assessment of how attackers could seek to extract information from the system, or go about attacking it.

- Observations:

- Issues with data attributes, such as dataset balance, representation, and the inclusion of misleading labels, that negatively impacted model performance.

- Problems when operating outside the training data's range, leading to vulnerabilities in some objects and situations.

- Information unintentionally exposed by the AI that would enable users to reverse engineer the model or deduce technical or business logic.

- Recommendations:

- Suggested training data augmentations that could improve model robustness.

- Recommended more edge-case handling and the labelling of "no-class" objects, among other general labelling hygiene factors.

- Scope modelling to ensure the AI knows when situations are outside its scope.

- Security vulnerabilities of the AI were reported with the recommendation that the AI's physical container be secured to ensure data and analyses are kept confidential.
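
The data screening step listed under Actions Taken can be illustrated with a minimal sketch. This is not Advai's tooling or the method used for the client; it only shows one simple way to flag imbalance in a set of training labels. The function name `screen_label_balance`, the `max_ratio` threshold, and the class names are all assumptions made for illustration.

```python
from collections import Counter


def screen_label_balance(labels, max_ratio=10.0):
    """Count examples per class and flag under-represented classes.

    A class is flagged when the most common class has more than
    `max_ratio` times as many examples as it does. The threshold is an
    illustrative assumption, not a value from the case study.
    """
    counts = Counter(labels)
    if not counts:
        return {}, []
    largest = max(counts.values())
    flagged = sorted(c for c, n in counts.items() if largest / n > max_ratio)
    return dict(counts), flagged


# Hypothetical labels for an object-detection training set.
labels = ["class_a"] * 500 + ["class_b"] * 120 + ["class_c"] * 8
counts, flagged = screen_label_balance(labels)
print(counts)   # per-class example counts
print(flagged)  # classes that fall below the balance threshold
```

In this hypothetical set only `class_c` is flagged, since `class_a` has more than ten times as many examples. A real screening pass would likely also look at representation across conditions (lighting, occlusion, image quality) and at label correctness, which a simple count cannot capture.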

Who are Advai?

Advai is a deep tech AI start-up based in the UK. It has spent several years working with UK government and defence to understand and develop tooling for testing and validating AI. This tooling allows KPIs to be derived throughout the AI lifecycle, so that data scientists, engineers, and decision makers can quantify risks and deploy AI in a safe, responsible, and trustworthy manner.

If you would like to discuss this in more detail, please reach out to [email protected]