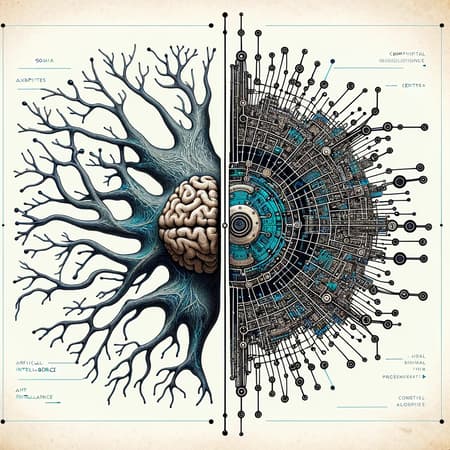

The AI revolution is here, but its success hinges on trust and safety. The UK’s AI Opportunities Action Plan presents a bold vision, yet more must be done before security and policing can fully harness AI’s potential.

The plan prioritises infrastructure: compute power, energy, and data centres. While essential, these alone won’t drive progress. The real challenge lies in bridging the gap between testing and deployment. Without rigorous testing, there’s no assurance; without assurance, deployment remains risky; and without deployment, AI’s benefits go unrealised.

As Peter Kyle, Secretary of State for Science, Innovation and Technology, put it, “Britain at its best encourages innovation but always has safety baked in from the outset.” For AI in security and policing, safety isn’t optional; it’s the foundation for trust, adoption, and growth.

Trust: The Barrier to AI Adoption

Despite AI’s rapid progress, adoption across the UK has been slow, not for lack of interest but for lack of trust. Decision-makers hesitate because key questions remain unanswered:

- Can AI reliably and ethically enhance public safety?

- What security issues exist in these systems?

- What standards will we be held accountable to?

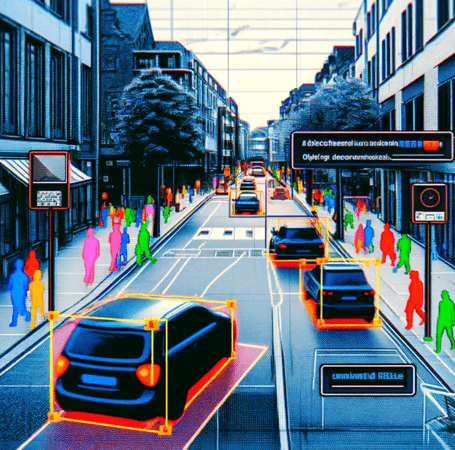

In high-stakes environments, trust is everything. AI systems must undergo rigorous testing and assurance to prove their reliability before real-world deployment. Without this, AI’s promise remains unfulfilled, and the risks of untested systems outweigh potential benefits.

Testing: The Engine of Progress

The AI Opportunities Action Plan calls for government-backed assurance tools to assess AI performance. In security and policing, these tools aren’t merely useful; they’re essential. Testing verifies that AI meets operational, regulatory, and ethical standards, and that it continues to do so as threats evolve.

To unlock AI’s full potential, the security sector needs clear, actionable guidance on evaluation and assurance. This isn’t just about regulation; it’s about building a framework that instils confidence and enables large-scale deployment.

Key Actions for AI Assurance

To make AI a trusted asset in security and policing, the UK must focus on the following:

- Defining Evaluation Metrics: Clear benchmarks for performance, reliability, and security, alongside non-technical considerations such as ethics, provide measurable pathways for improvement and allow fair comparisons across systems.

- Establishing Universal Standards: A consistent, reliable testing framework allows users and suppliers to understand AI capabilities, limitations, and compliance with ethical and operational requirements.

- Providing Government Support: Investment in third-party evaluators, assurance tools, and public-private partnerships will accelerate AI adoption while maintaining oversight.

- Integrating Standards into Procurement: AI evaluation frameworks should be embedded into procurement processes to ensure only rigorously tested systems are deployed. Public sector agencies can set the precedent for trust and adoption.

The UK’s Opportunity to Lead

The UK has the chance to be a global leader in AI-driven security and policing, but success depends on a relentless focus on the basics: test, assure, deploy. For sectors where public trust and operational reliability are paramount, this process is non-negotiable.

The AI Opportunities Action Plan provides a framework for immediate action. Investing in assurance capabilities today will deliver tangible results even as larger infrastructure projects take shape. Supporting companies that specialise in AI safety will not only strengthen domestic capabilities but also place the UK at the forefront of trusted AI innovation.

This is a defining moment. To unlock AI’s full potential, we must prioritise rigorous testing and assurance. When safety and innovation go hand in hand, AI can enhance public safety, improve operational efficiency, and strengthen national security.

The hard work begins now. Testing, assurance, and deployment aren’t just steps in a process; they are the foundation of AI’s value in security and policing, ensuring progress without compromising safety.