Welcome to the Era of AI 2.0

This article was originally published on LinkedIn.

Progress can feel incremental, don’t you think? It’s subtle and, like the proverbial frog in slowly heating water, can go mostly unnoticed.

Did you notice?

Did you notice the moment when we entered the era of AI 2.0?

If you didn’t, then it’s important that you take note now, because taking full advantage of AI 2.0 and avoiding its pitfalls will require your full attention!

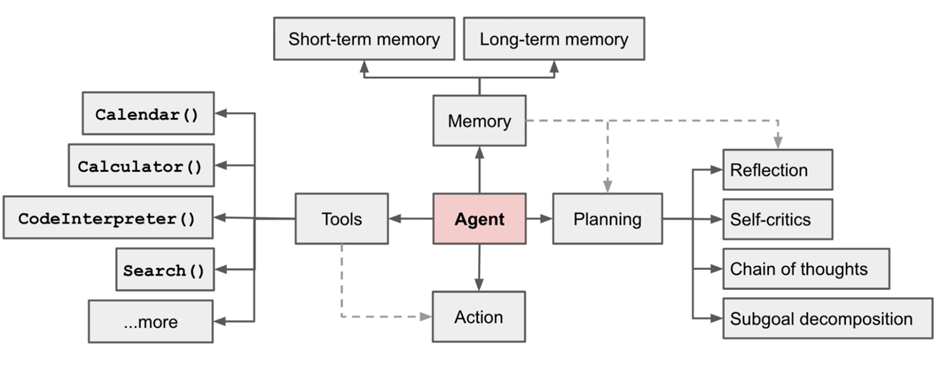

We must collectively recognise this incredible, pivotal moment, so we can

a) capitalise on the economic opportunity presented, and

b) exercise the caution and foresight needed to meet a new age of safety challenges.