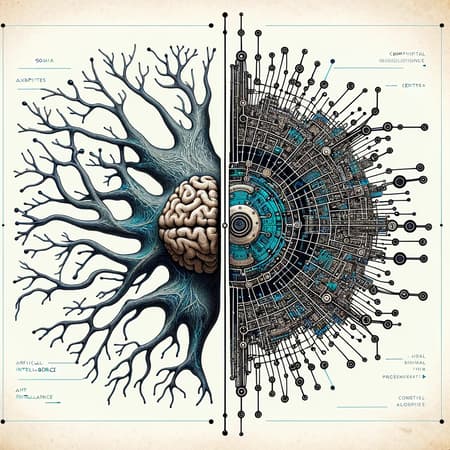

The main reason Responsible AI is important is that it protects both the organisations that create or use AI and the people affected by AI-driven decisions. Leaders in AI are looking to adopt Responsible AI as a process through which they can demonstrate that they are addressing some of the fundamental concerns, issues, and limitations associated with AI, not only on a technical basis but also in a way that aligns its use with a company’s culture and ethical responsibilities.

Responsible AI will also be necessary wherever organisations must demonstrate that their AI systems and organisational practices fulfil the requirements of agreed-upon regulations, standards, best practices, and laws (such as the EU AI Act). As new regulations, directives, and principles governing the use of AI are released in different regions, a demonstrable governance framework that addresses the risks of AI and builds trust will shift from being optional to being a requirement.

Responsible AI is also a means by which organisations can protect themselves from legal issues, reputational damage, and other risks associated with automated decision-making.